|

|

--- |

|

|

tags: |

|

|

- gguf-connector |

|

|

widget: |

|

|

- text: a cat in a hat |

|

|

output: |

|

|

url: https://raw.githubusercontent.com/calcuis/gguf-pack/master/w8g.png |

|

|

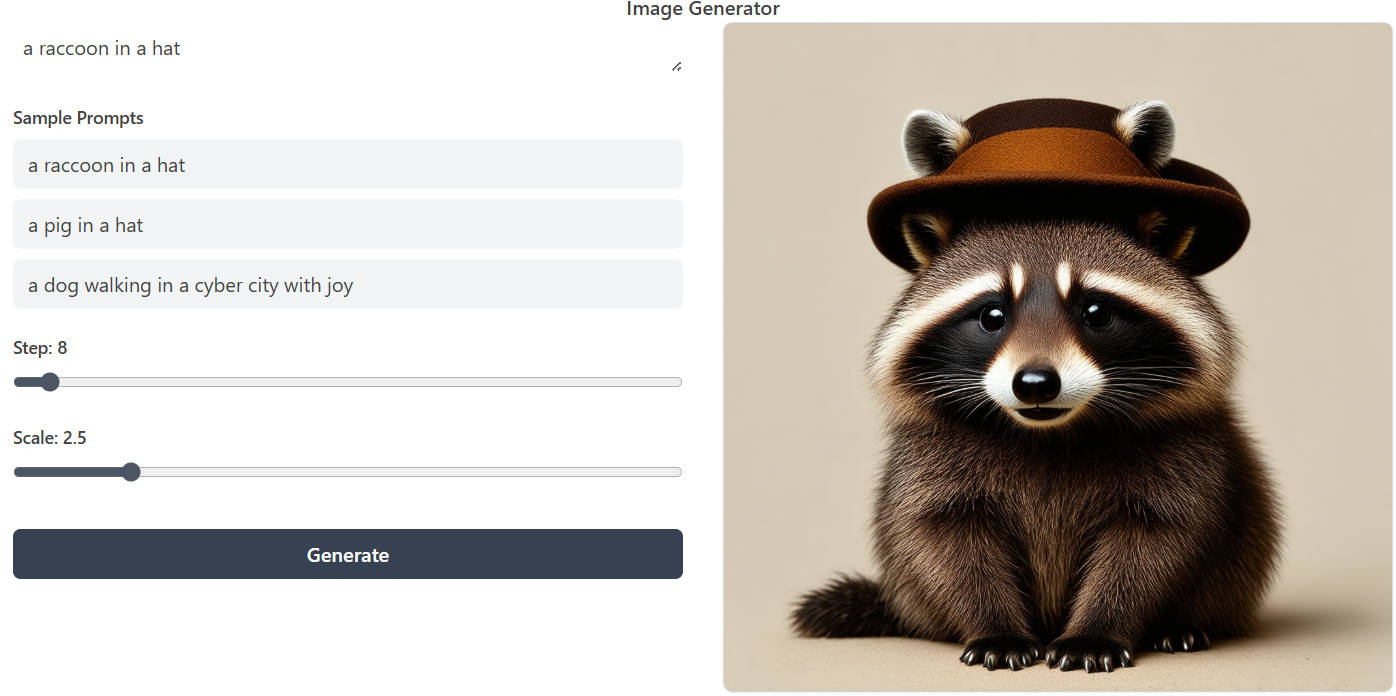

- text: a raccoon in a hat |

|

|

output: |

|

|

url: https://raw.githubusercontent.com/calcuis/gguf-pack/master/w8f.png |

|

|

- text: a raccoon in a hat |

|

|

output: |

|

|

url: https://raw.githubusercontent.com/calcuis/gguf-pack/master/w6a.png |

|

|

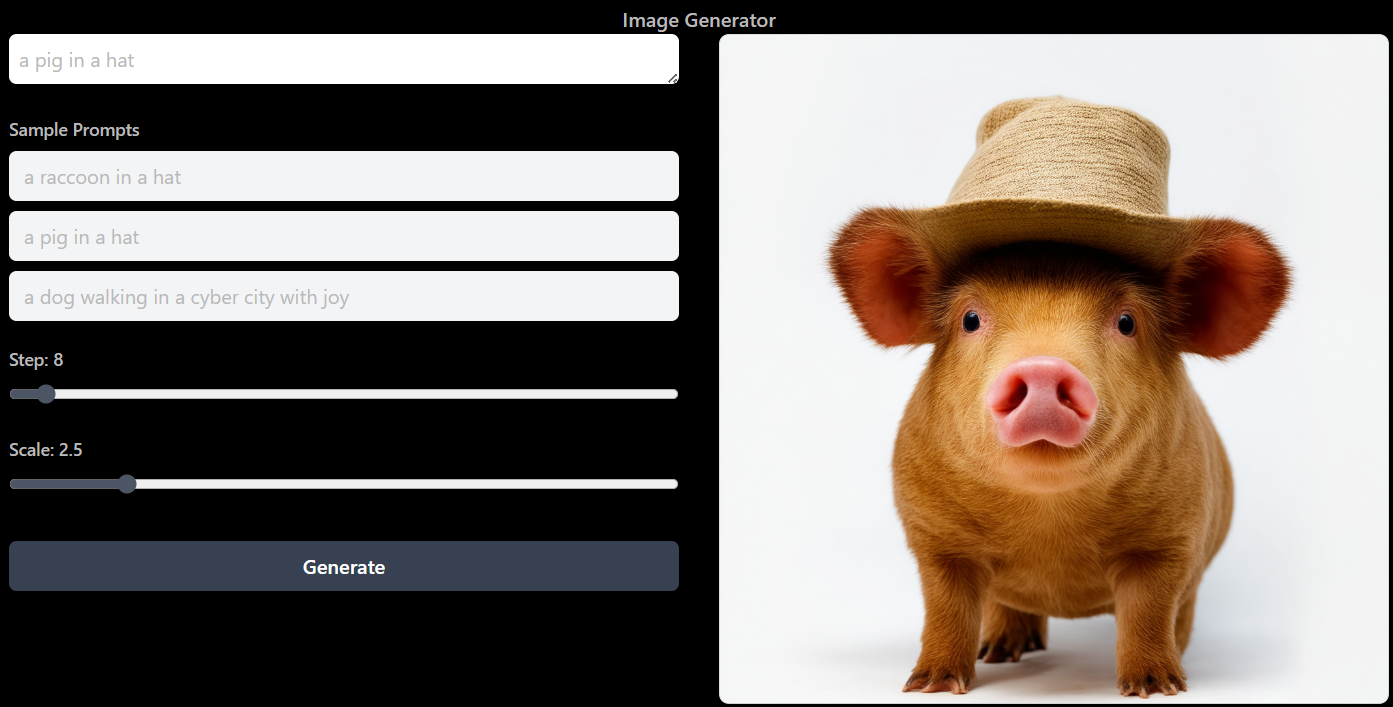

- text: a dog walking in a cyber city with joy |

|

|

output: |

|

|

url: https://raw.githubusercontent.com/calcuis/gguf-pack/master/w6b.png |

|

|

- text: a dog walking in a cyber city with joy |

|

|

output: |

|

|

url: https://raw.githubusercontent.com/calcuis/gguf-pack/master/w6c.png |

|

|

- text: a dog walking in a cyber city with joy |

|

|

output: |

|

|

url: https://raw.githubusercontent.com/calcuis/gguf-pack/master/w8e.png |

|

|

--- |

|

|

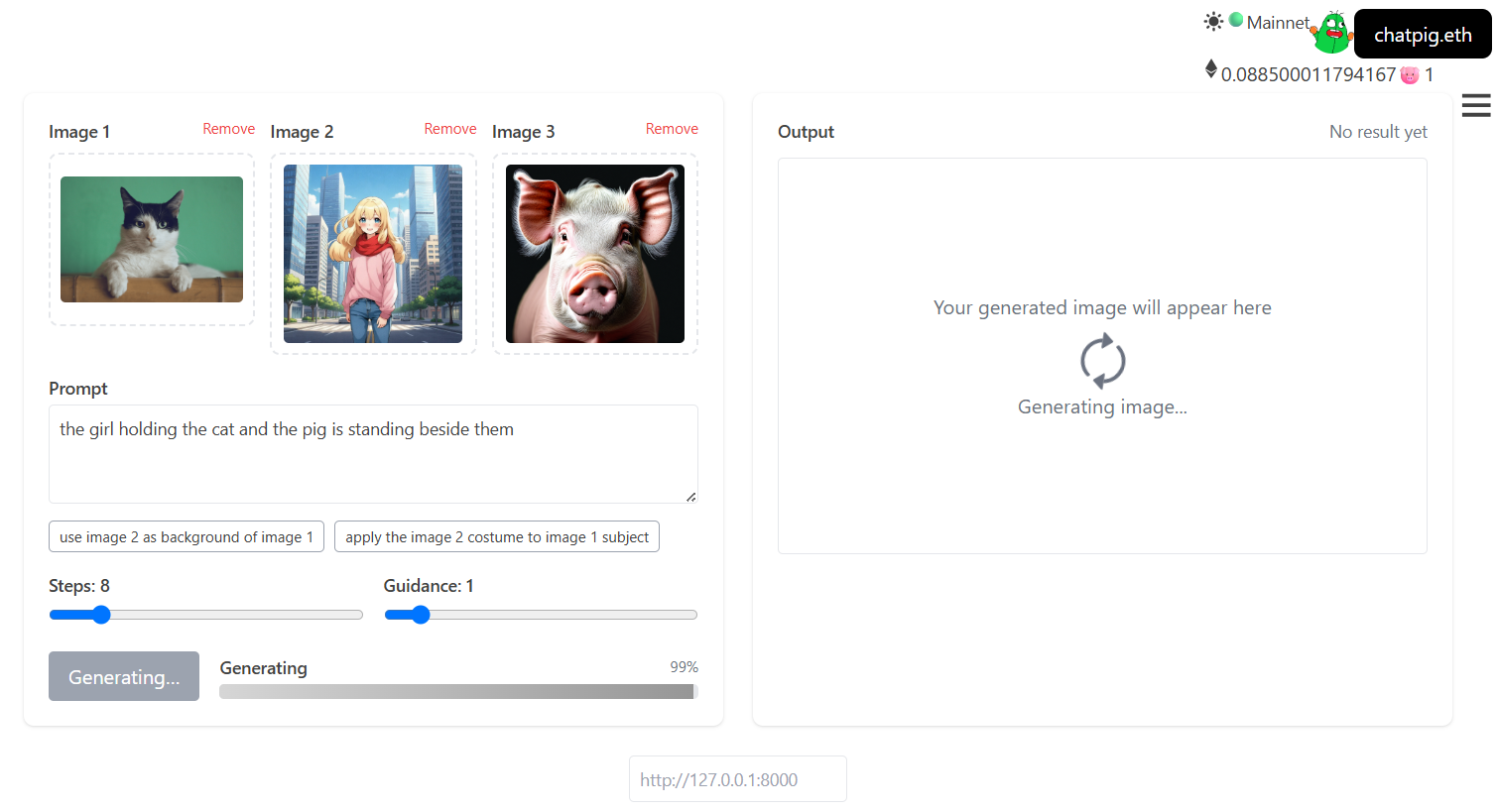

## self-hosted api |

|

|

- run it with `gguf-connector`; activate the backend in console/terminal by |

|

|

``` |

|

|

ggc w8 |

|

|

``` |

|

|

- choose your model* file |

|

|

> |

|

|

>GGUF available. Select which one to use: |

|

|

> |

|

|

>1. sd3.5-2b-lite-iq4_nl.gguf [[1.74GB](https://huggingface.co/calcuis/sd3.5-lite-gguf/blob/main/sd3.5-2b-lite-iq4_nl.gguf)] |

|

|

>2. sd3.5-2b-lite-mxfp4_moe.gguf [[2.86GB](https://huggingface.co/calcuis/sd3.5-lite-gguf/blob/main/sd3.5-2b-lite-mxfp4_moe.gguf)] |

|

|

> |

|

|

>Enter your choice (1 to 2): _ |

|

|

> |

|

|

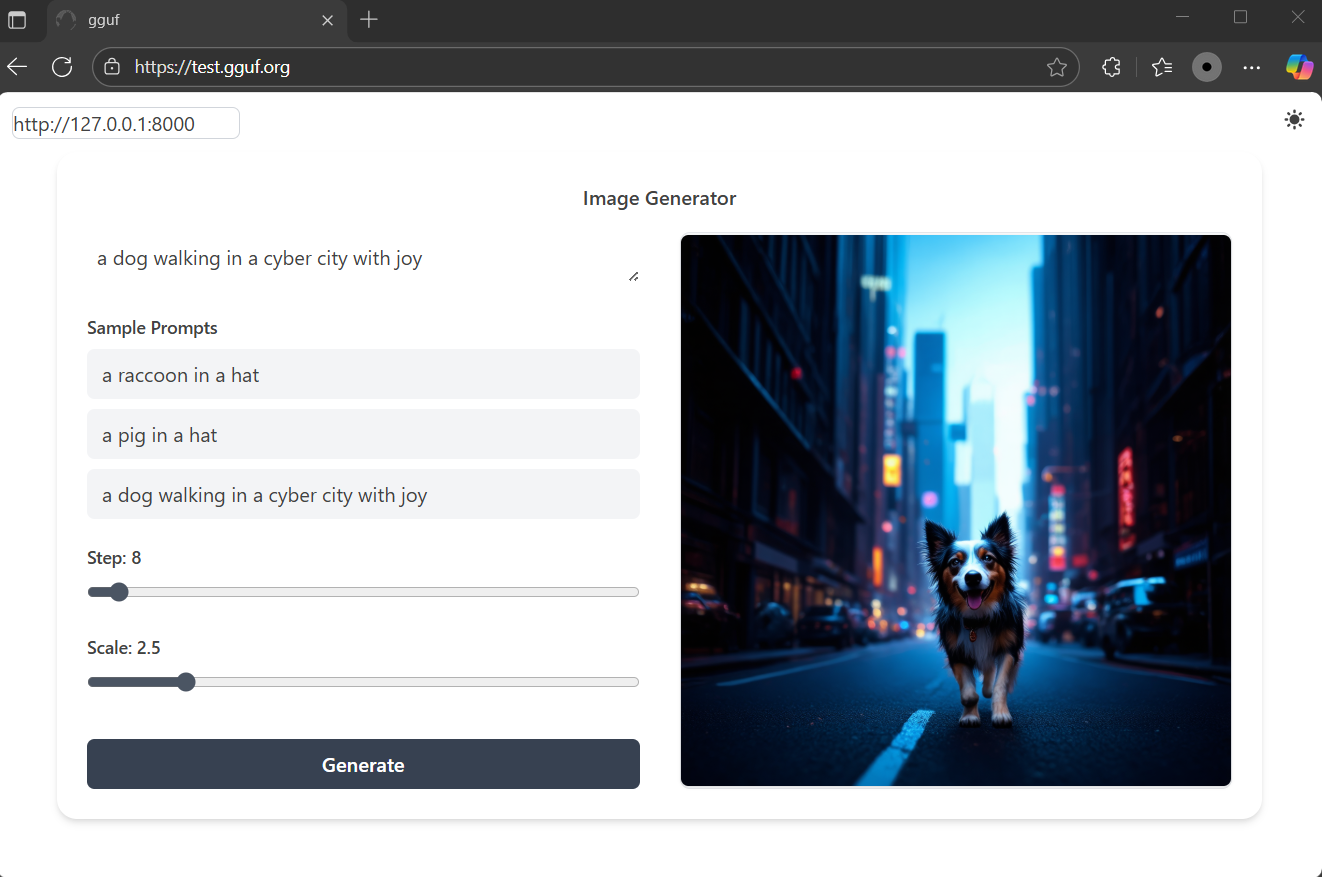

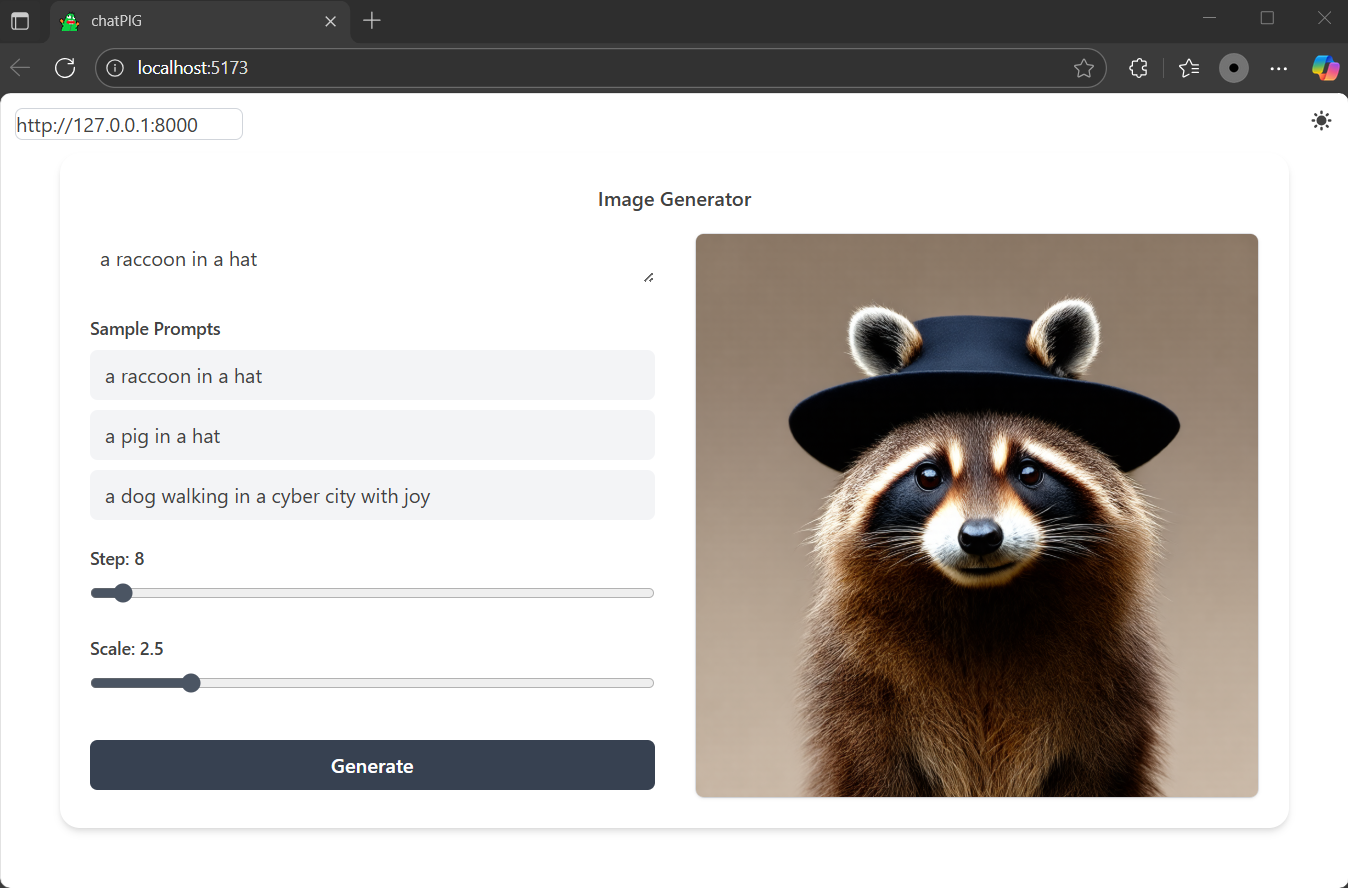

*accept sd3.5 2b model gguf recently, this will give you the fastest experience for even low tier gpu; frontend https://test.gguf.org or localhost (see **decentralized frontend** section below) |

|

|

|

|

|

|

|

|

|

|

|
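
The size links in the listing point at Hugging Face `blob` pages, which show a file viewer rather than the file itself. Below is a minimal sketch for fetching a model from the terminal, assuming `curl` is installed. `blob_to_resolve` is a hypothetical helper name, and the rewrite relies on Hugging Face serving the raw file under `/resolve/` in place of `/blob/`:

```shell
# Turn a Hugging Face "blob" page URL into a direct-download "resolve" URL.
# blob_to_resolve is a hypothetical helper, not part of gguf-connector.
blob_to_resolve() {
    # Only the first "/blob/" in the URL is rewritten.
    printf '%s\n' "$1" | sed 's#/blob/#/resolve/#'
}

# Example (commented out so nothing is downloaded by accident):
# curl -L -O "$(blob_to_resolve https://huggingface.co/calcuis/sd3.5-lite-gguf/blob/main/sd3.5-2b-lite-iq4_nl.gguf)"
```

In the `curl` line, `-L` follows redirects and `-O` keeps the remote file name.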

- or opt for the fastapi **lumina** connector

```
ggc w7
```

- choose your model* file
>
>GGUF available. Select which one to use:
>
>1. lumina2-q4_0.gguf [[1.47GB](https://huggingface.co/calcuis/lumina-gguf/blob/main/lumina2-q4_0.gguf)]
>2. lumina2-q8_0.gguf [[2.77GB](https://huggingface.co/calcuis/lumina-gguf/blob/main/lumina2-q8_0.gguf)]
>
>Enter your choice (1 to 2): _
>

*as there is currently no lite version of lumina, you might need to increase the steps to around 25 for better output

- or opt for the fastapi **flux** connector

```
ggc w6
```

- choose your model* file
>
>GGUF available. Select which one to use:
>
>1. flux-dev-lite-q2_k.gguf [[4.08GB](https://huggingface.co/calcuis/krea-gguf/blob/main/flux-dev-lite-q2_k.gguf)]
>2. flux-krea-lite-q2_k.gguf [[4.08GB](https://huggingface.co/calcuis/krea-gguf/blob/main/flux-krea-lite-q2_k.gguf)]
>
>Enter your choice (1 to 2): _
>

*accepts any flux model gguf; lite is recommended to save loading time

- flexible frontend choice (see below)
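
Each connector above answers with a numbered menu of model files. The sketch below previews such a menu before a backend is launched. It assumes `ggc` picks up `.gguf` files from the folder it is started in, which is an assumption rather than something confirmed here. `list_gguf` is a hypothetical helper:

```shell
# Print a numbered list of .gguf files in a folder (default: current folder).
# Assumption: ggc offers the .gguf files found in the folder it is started in.
# list_gguf is a hypothetical helper, not part of gguf-connector.
list_gguf() {
    dir="${1:-.}"
    n=0
    for f in "$dir"/*.gguf; do
        [ -e "$f" ] || continue   # skip the literal pattern when nothing matches
        n=$((n + 1))
        printf '%d. %s\n' "$n" "$(basename "$f")"
    done
    [ "$n" -gt 0 ] || echo "no .gguf files found in $dir"
}
```

Calling `list_gguf` with no argument checks the current folder; pass a path to check another one.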

## decentralized frontend

- option 1: navigate to https://test.gguf.org
- option 2: localhost; keep the backend running and open a new terminal session then execute

```
ggc b
```

<Gallery />

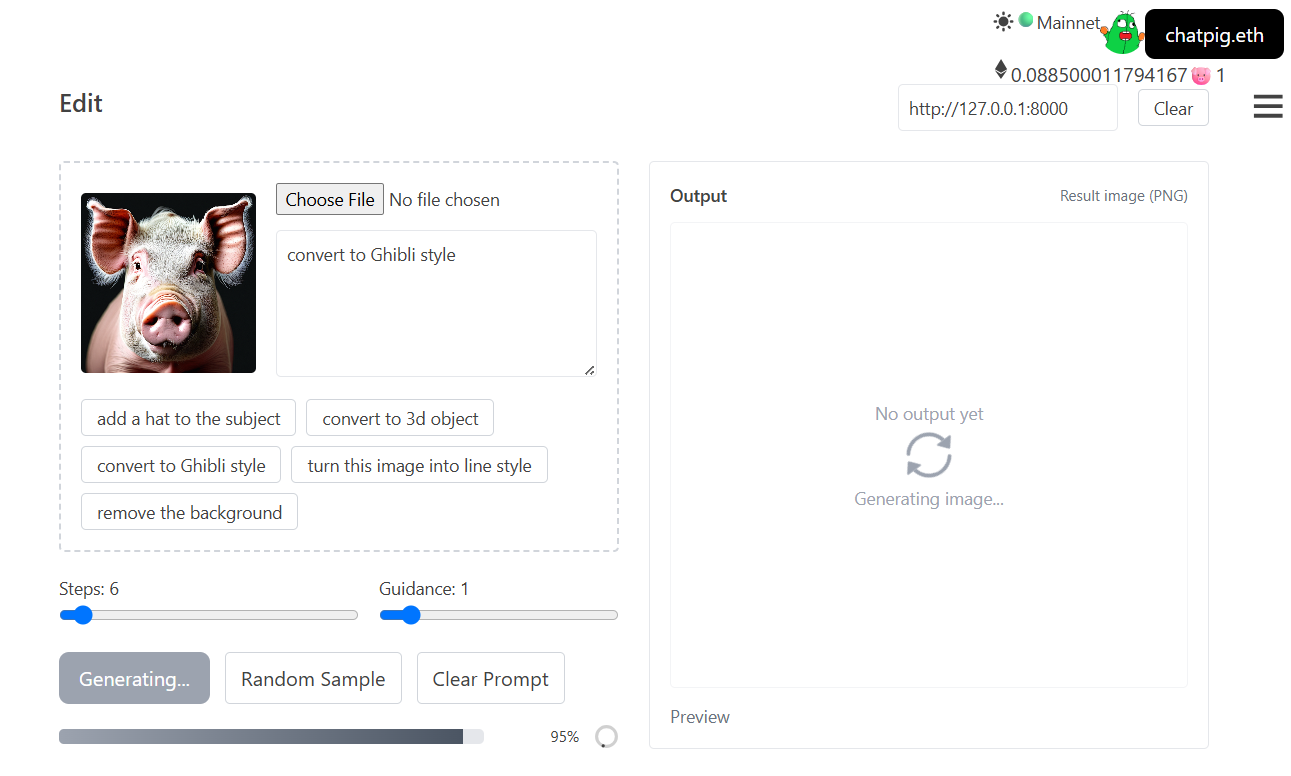

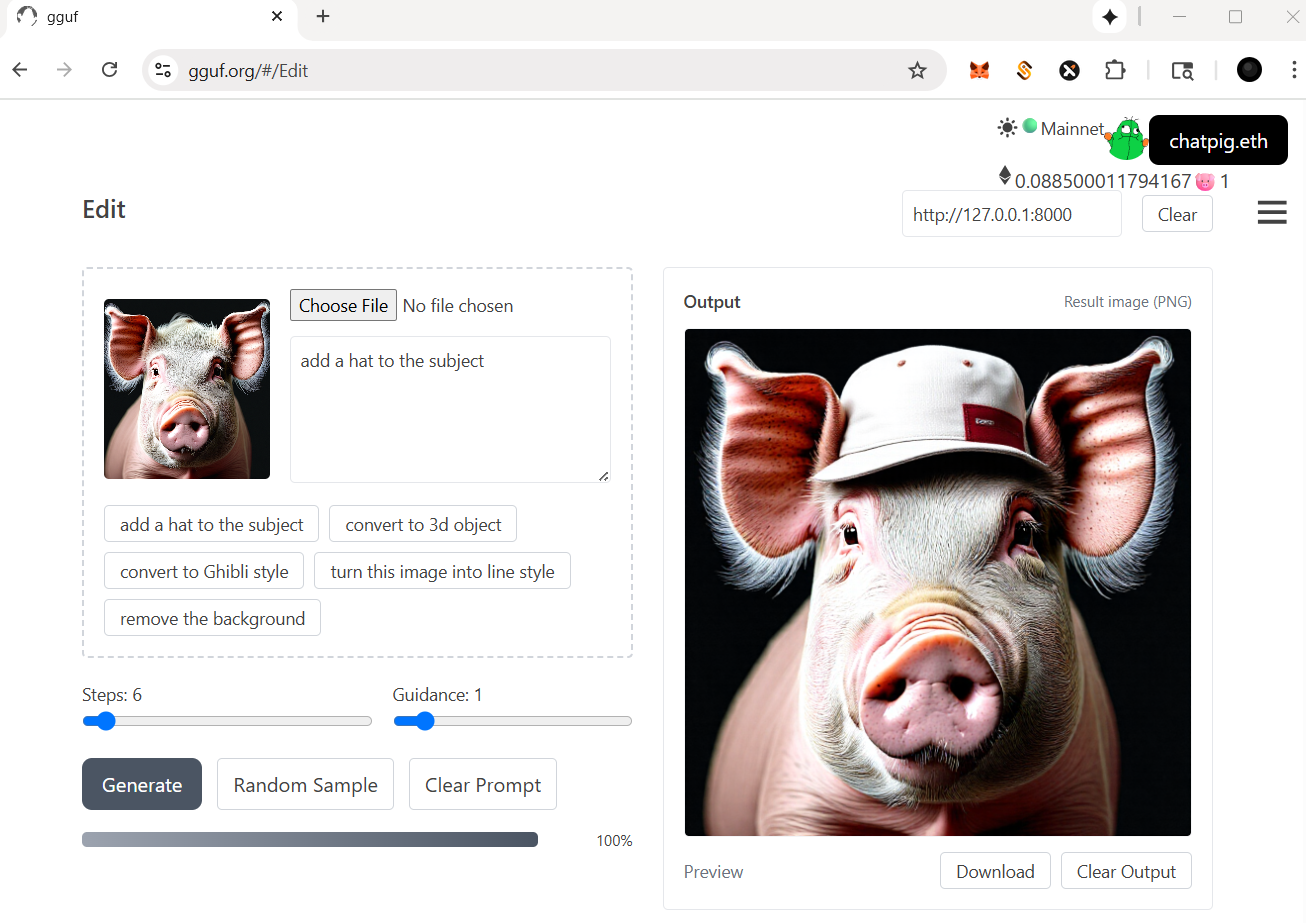

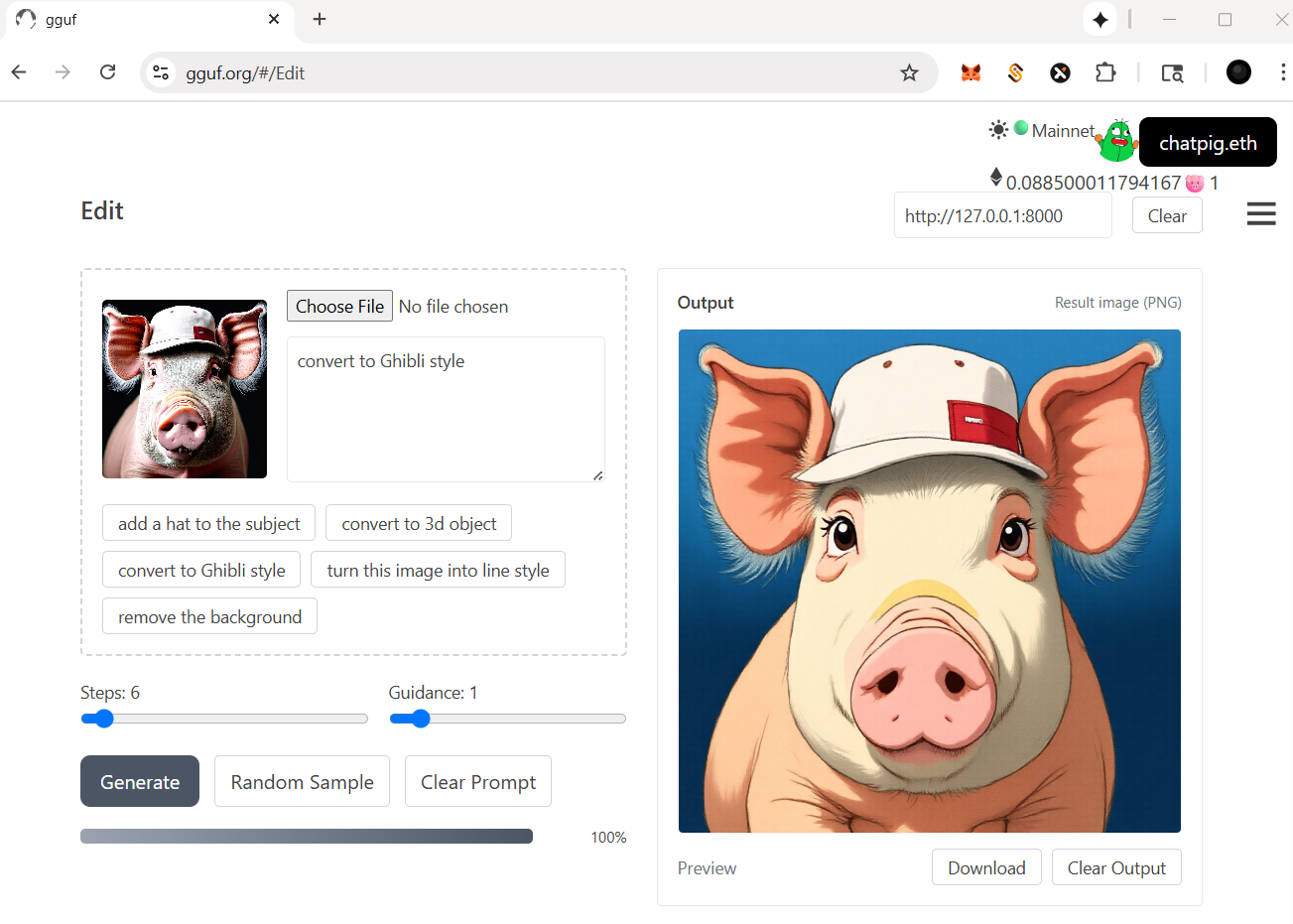

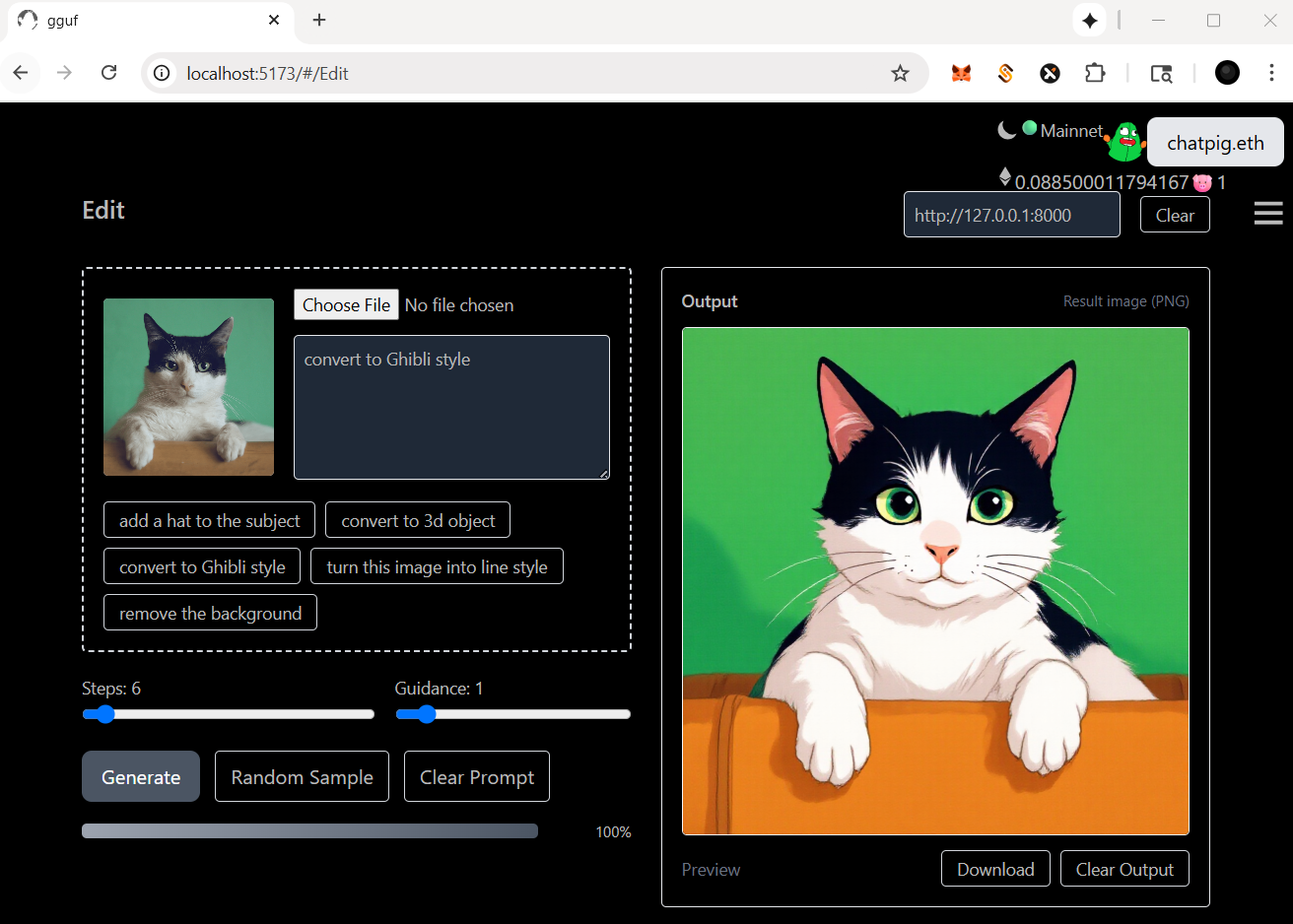

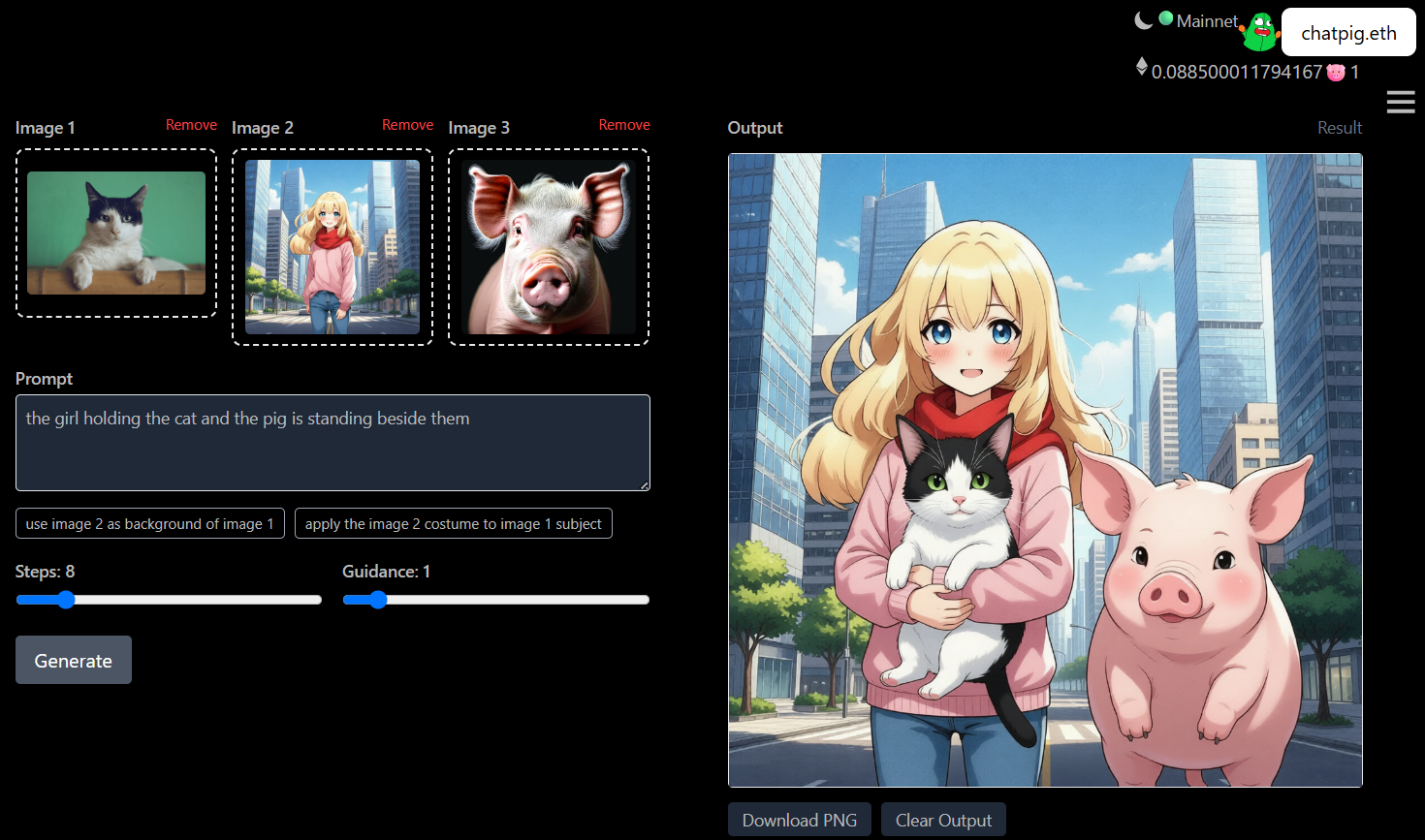
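
Option 2 above needs a second terminal in which `ggc` is also on the `PATH`. Below is a small sketch for checking that before launching the frontend. `need_cmd` is a hypothetical helper, and the install hint assumes the CLI comes from the `gguf-connector` package on PyPI:

```shell
# Report whether a command is available in the current shell session.
# need_cmd is a hypothetical helper, not part of gguf-connector.
need_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1 found"
    else
        # Assumption: the ggc CLI is installed by the gguf-connector package.
        echo "$1 not found; if this is ggc, try: pip install gguf-connector" >&2
        return 1
    fi
}

# Example (commented out so the frontend is not started by accident):
# need_cmd ggc && ggc b
```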

## self-hosted api (edit)

- run it with `gguf-connector`; activate the backend in console/terminal by

```
ggc e8
```

- choose your model file
>
>GGUF available. Select which one to use:
>
>1. flux-kontext-lite-q2_k.gguf [[4.08GB](https://huggingface.co/calcuis/kontext-gguf/blob/main/flux-kontext-lite-q2_k.gguf)]
>
>Enter your choice (1 to 1): _
>

## decentralized frontend - choose `Edit` from the pulldown menu (stage 1: currently exclusive to 🐷 holders for trial)

- option 1: navigate to https://gguf.org
- option 2: localhost; keep the backend running and open a new terminal session, then execute

```
ggc a
```

## self-hosted api (plus)

- run it with `gguf-connector`; activate the backend in console/terminal by

```
ggc e9
```

- choose your model file
>
>Safetensors available. Select which one to use:
>
>1. sketch-s9-20b-fp4.safetensors (for blackwell card [11.9GB](https://huggingface.co/calcuis/sketch/blob/main/sketch-s9-20b-fp4.safetensors))
>2. sketch-s9-20b-int4.safetensors (for non-blackwell card [11.5GB](https://huggingface.co/calcuis/sketch/blob/main/sketch-s9-20b-int4.safetensors))
>
>Enter your choice (1 to 2): _
>
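
The menu above splits the two files by whether the card is Blackwell. Below is a rough sketch for picking between them from the GPU's CUDA compute capability. It assumes that Blackwell cards report a compute capability of 10.x or higher, which is my assumption and not stated in this card. `pick_sketch_file` is a hypothetical helper:

```shell
# Suggest which sketch-s9 file fits a GPU, given its CUDA compute capability.
# Assumption: Blackwell cards report compute capability 10.x or higher.
# pick_sketch_file is a hypothetical helper, not part of gguf-connector.
pick_sketch_file() {
    major="${1%%.*}"   # "12.0" -> "12"
    case "$major" in
        ''|*[!0-9]*)
            echo "unknown compute capability: $1" >&2
            return 1
            ;;
    esac
    if [ "$major" -ge 10 ]; then
        echo "sketch-s9-20b-fp4.safetensors"
    else
        echo "sketch-s9-20b-int4.safetensors"
    fi
}

# Example, assuming a driver recent enough to support the compute_cap query:
# pick_sketch_file "$(nvidia-smi --query-gpu=compute_cap --format=csv,noheader | head -n 1)"
```

Treat the result as a hint only; the labels in the menu itself remain the reference.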

## decentralized frontend - choose `Plus` from the pulldown menu (stage 1: currently exclusive to 🐷 holders for trial)

- option 1: navigate to https://gguf.org
- option 2: localhost; keep the backend running and open a new terminal session, then execute

```
ggc a
```