🚀 VLQM-1.5B-Coder

A fine-tuned language model for generating Manim animation code from natural language descriptions.

📖 Model Description

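VLQM-1.5B-Coder is a specialized language model fine-tuned to generate Manim Python code from natural language descriptions. Manim is the mathematical animation engine originally created for the 3Blue1Brown videos; this model targets the community-maintained edition, Manim Community (`from manim import *`).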

What This Model Does

Given a natural language prompt:

"Create a blue circle that moves to the right"

The model generates Manim Python code such as:

```python
from manim import *

class GenScene(Scene):
    def construct(self):
        circle = Circle(color=BLUE)
        self.add(circle)
        self.play(circle.animate.shift(RIGHT * 3))
```

🎯 Intended Use

- Primary Use: Generating Manim animation code from text descriptions

- Users: Developers, educators, content creators making math/science animations

- Languages: English prompts, Python code output

Example Use Cases

- Creating educational math animations

- Generating visualizations for presentations

- Prototyping Manim scenes quickly

- Learning Manim syntax through examples

📊 Training Details

| Property | Value |
|---|---|
| Base Model | Qwen/Qwen2.5-Coder-1.5B-Instruct |
| Parameters | 1.5 billion |
| Training Method | LoRA (Low-Rank Adaptation) |
| Dataset | generaleoley/manim-codegen |
| Training Examples | 1,459 |
| Validation Examples | 163 |
| Training Framework | Apple MLX |
| Hardware | MacBook Pro (Apple Silicon) |

Hyperparameters

| Parameter | Value |
|---|---|
| Iterations | 300 |
| Batch Size | 2 |
| Gradient Accumulation Steps | 8 |
| Learning Rate | 5e-5 |
| LoRA Layers | 16 |
| Max Sequence Length | 8,192 tokens |
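As a rough sanity check on these settings, the sketch below estimates the effective batch size and how many passes over the 1,459 training examples 300 iterations amount to. The card does not say whether one iteration is a single micro-batch or one optimizer update over all accumulated micro-batches, so `training_budget` (a hypothetical helper, not part of MLX or any other library) computes both readings: roughly 3.3 epochs if each iteration is an optimizer update, or roughly 0.4 epochs if each iteration is one micro-batch of 2.

```python
def training_budget(iters: int, batch_size: int, grad_accum: int,
                    n_train: int, iter_is_optimizer_step: bool) -> dict:
    """Estimate examples seen and epochs for a given reading of 'iteration'.

    Assumption: the card does not define 'iteration', so both readings are supported.
    """
    effective_batch = batch_size * grad_accum
    if iter_is_optimizer_step:
        # Each iteration consumes grad_accum micro-batches, then updates once.
        examples_seen = iters * effective_batch
        optimizer_steps = iters
    else:
        # Each iteration is one micro-batch; an update happens every grad_accum iterations.
        examples_seen = iters * batch_size
        optimizer_steps = iters / grad_accum
    return {
        "effective_batch": effective_batch,
        "examples_seen": examples_seen,
        "optimizer_steps": optimizer_steps,
        "epochs": examples_seen / n_train,
    }


for reading in (True, False):
    budget = training_budget(300, 2, 8, 1459, iter_is_optimizer_step=reading)
    print(f"iteration = optimizer step: {reading}", budget)
```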

📈 Model Performance

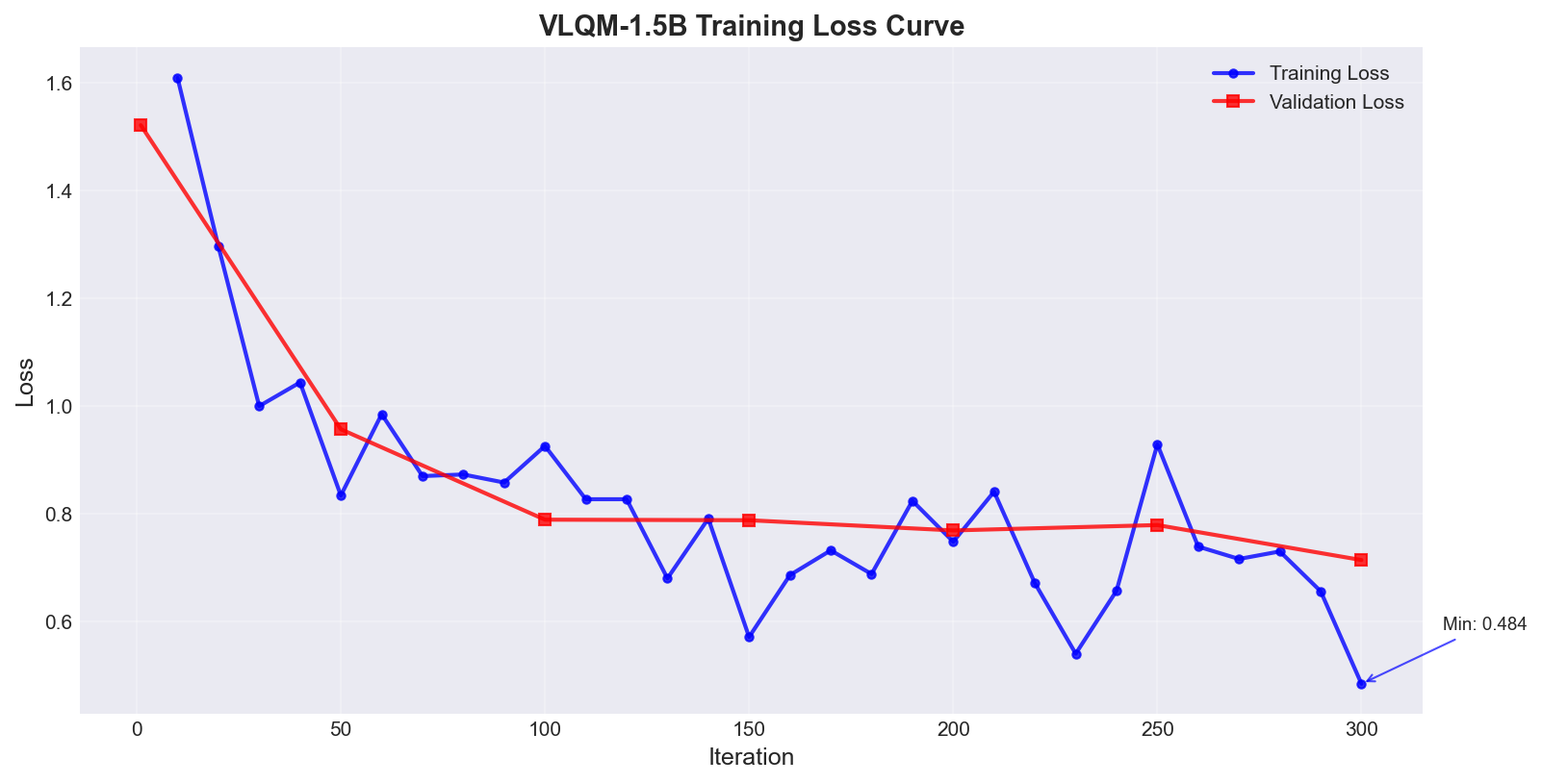

Training Loss Curve

Training loss falls to about 0.48, while validation loss levels off at around 0.71.
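If the reported losses are mean per-token cross-entropy in nats (a common convention for these training loops, but an assumption here because the card does not state it), they can be converted to perplexity with exp(loss). The sketch below does that conversion for the two values quoted above.

```python
import math


def perplexity(mean_token_nll: float) -> float:
    """Perplexity = exp(mean per-token negative log-likelihood), assuming natural-log units."""
    return math.exp(mean_token_nll)


train_ppl = perplexity(0.48)  # about 1.62
val_ppl = perplexity(0.71)    # about 2.03
print(f"train perplexity ~ {train_ppl:.2f}, validation perplexity ~ {val_ppl:.2f}")
```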

⚠️ Limitations

Known Limitations

- Complex Animations: May struggle with multi-step animations involving many objects

- Advanced Manim Features: Less reliable with 3D scenes, complex graphs, or advanced camera movements

- API Hallucinations: Sometimes generates non-existent Manim methods (e.g., `axes.get_sine()`)

- Indentation Issues: Occasionally produces incorrectly indented code

- Long Prompts: Performance degrades with very long or complex descriptions

What This Model is NOT

- ❌ Not a general-purpose code generator

- ❌ Not trained for non-Manim Python code

- ❌ Not suitable for production without human review

- ❌ Not a replacement for learning Manim fundamentals

Recommended Practices

- ✅ Always review and test generated code before use

- ✅ Use simple, clear prompts for best results

- ✅ Keep prompts focused on one animation at a time

- ✅ Be prepared to make minor edits to fix issues
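A cheap first pass before rendering is a static check with Python's standard `ast` module. The sketch below is an illustrative helper (`check_manim_script` is hypothetical, not part of Manim) that catches the syntax and indentation errors listed under Known Limitations and confirms that some class derives from a `*Scene` base and defines `construct()`. It cannot detect hallucinated API calls such as `axes.get_sine()`; those only surface when Manim actually runs the scene.

```python
import ast


def _base_name(node: ast.expr) -> str:
    """Return the trailing name of a base-class expression (Scene or manim.Scene -> 'Scene')."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        return node.attr
    return ""


def check_manim_script(code: str) -> list[str]:
    """Return a list of static problems; an empty list means no obvious issues were found."""
    try:
        tree = ast.parse(code)
    except SyntaxError as exc:  # IndentationError is a subclass of SyntaxError
        return [f"{type(exc).__name__} on line {exc.lineno}: {exc.msg}"]

    problems = []
    # Any class whose base name ends in "Scene" (Scene, ThreeDScene, MovingCameraScene, ...).
    scenes = [
        node for node in ast.walk(tree)
        if isinstance(node, ast.ClassDef)
        and any(_base_name(base).endswith("Scene") for base in node.bases)
    ]
    if not scenes:
        problems.append("no class deriving from a *Scene base was found")
    for cls in scenes:
        methods = {n.name for n in cls.body if isinstance(n, ast.FunctionDef)}
        if "construct" not in methods:
            problems.append(f"{cls.name} has no construct() method")
    return problems
```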

🚀 Quick Start

Using with Transformers (Cross-Platform)

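```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "vikramlingam/VLQM-1.5B-Coder"

# device_map="auto" requires the `accelerate` package
model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto")
tokenizer = AutoTokenizer.from_pretrained(model_id)

prompt = "Create a red square that rotates"
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, max_new_tokens=256)

# Decode only the newly generated tokens and drop special tokens such as <|endoftext|>
new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
print(tokenizer.decode(new_tokens, skip_special_tokens=True))
```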
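Depending on the prompt, the output may be bare code or code wrapped in a Markdown fence; the card does not specify which. A small post-processing step, sketched below with hypothetical helper names (`extract_code`, `save_scene`), keeps only the code and writes it to a file that Manim can render. It assumes the first fenced block, if any, is the one that matters.

```python
import re
from pathlib import Path

# Matches a Markdown code fence, optionally tagged python/py, and captures its body.
# "`{3}" stands in for three literal backticks so this snippet nests safely in Markdown.
_FENCE_RE = re.compile(r"`{3}(?:python|py)?[ \t]*\n(.*?)`{3}", re.DOTALL)


def extract_code(text: str) -> str:
    """Return the body of the first fenced block in `text`, or the whole text if no fence is found."""
    match = _FENCE_RE.search(text)
    code = match.group(1) if match else text
    return code.strip() + "\n"


def save_scene(text: str, path: str = "scene.py") -> Path:
    """Write the extracted code to `path` and return the path."""
    out = Path(path)
    out.write_text(extract_code(text), encoding="utf-8")
    return out
```

For example, `save_scene(decoded_text)`, where `decoded_text` is the string printed by the Transformers example.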

Using with MLX (Apple Silicon)

```bash
python -m mlx_lm.generate \
  --model vikramlingam/VLQM-1.5B-Coder \
  --prompt "Create a circle animation" \
  --max-tokens 256
```
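Once the generated code is saved to a file, it can be rendered with the Manim Community CLI, for example `manim -pql scene.py GenScene`. The helpers below are a hypothetical wrapper that builds and runs that command via `subprocess`; they assume `manim` is installed and on `PATH`, and that the scene class is named `GenScene` as in the example output near the top of this card. A failed render (for instance, from a hallucinated method) raises `subprocess.CalledProcessError`.

```python
import subprocess
from pathlib import Path

# Manim Community quality presets for -q: l(ow), m(edium), h(igh), p (2K), k (4K)
QUALITY_FLAGS = {"l", "m", "h", "p", "k"}


def build_render_command(script: Path, scene: str = "GenScene",
                         quality: str = "l", preview: bool = False) -> list[str]:
    """Build a command such as `manim -pql scene.py GenScene`."""
    if quality not in QUALITY_FLAGS:
        raise ValueError(f"unknown quality {quality!r}; expected one of {sorted(QUALITY_FLAGS)}")
    flags = "-" + ("p" if preview else "") + "q" + quality
    return ["manim", flags, str(script), scene]


def render(script: Path, scene: str = "GenScene",
           quality: str = "l", preview: bool = False) -> None:
    """Run the Manim CLI; check=True raises CalledProcessError if rendering fails."""
    subprocess.run(build_render_command(script, scene, quality, preview), check=True)
```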

📁 Model Files

```
VLQM-1.5B-Coder/
├── config.json              # Model configuration
├── model.safetensors        # Model weights (~3 GB)
├── tokenizer.json           # Tokenizer
├── tokenizer_config.json
├── vocab.json
├── merges.txt
├── generation_config.json
├── special_tokens_map.json
└── README.md                # This file
```

📜 License

This model is released under the Apache 2.0 License.

- ✅ Commercial use allowed

- ✅ Modification allowed

- ✅ Distribution allowed

- ⚠️ Must include license and copyright notice

🙏 Acknowledgments

- Base Model: Qwen/Qwen2.5-Coder-1.5B-Instruct by Alibaba

- Dataset: generaleoley/manim-codegen

- Training Framework: Apple MLX

- Animation Engine: Manim Community

📬 Citation

If you use this model, please cite:

```bibtex
@misc{vlqm-1.5b-coder,
  author    = {Vikram Lingam},
  title     = {VLQM-1.5B-Coder: Manim Code Generation Model},
  year      = {2025},
  publisher = {Hugging Face},
  url       = {https://huggingface.co/vikramlingam/VLQM-1.5B-Coder}
}
```

📊 Model Card Contact

For questions or issues, please open a discussion on the model's Hugging Face page.

